Machine learning is about making predictions and decisions from data gathered from diverse sources. But how do we know whether those decisions are well-informed? This is where entropy, a concept from information theory, comes into play. Entropy in machine learning measures the uncertainty or disorder in the available data, which guides the model toward smarter decisions.

From decision trees to probabilistic models, entropy plays an essential role in structuring how machines learn. Entropy as defined in information theory is not common knowledge and takes some specialized study. Still, understanding it matters for anyone making business decisions with data analytics, and for anyone who wants to know how machine learning and its related components work.

In this article, we take an in-depth look at entropy and at the real-world applications through which it can transform businesses.

What is Entropy in Machine Learning?

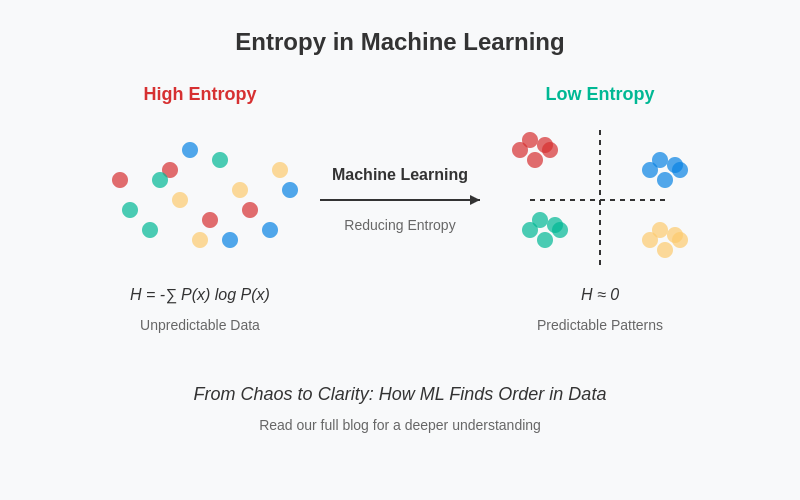

At its core, entropy in machine learning is a measure of the disorder or uncertainty in the dataset available to the model. If a dataset has a high level of disorder, the outcomes are uncertain, and more information is needed to make an accurate prediction.

To make this concrete, let us break down the meaning of entropy in machine learning with a simple example.

For an ecommerce store, predicting whether a visitor will make a purchase can be achieved through AI-driven customer behavior analysis. By leveraging ML models with software product engineering, businesses can analyze real-time user interactions, browsing history, and engagement patterns to estimate the likelihood of conversion. If you only have data on a visitor’s age, and age alone says little about purchase behavior, the entropy is high. However, the entropy decreases with additional information, such as browsing history, location, and past purchases, because you can make more confident predictions.

This way, a decision tree can divide the data into groups based on this information, with each group having lower entropy and a clearer outcome. A decision tree in machine learning is a supervised learning algorithm used for classification and regression tasks.

Why Does Entropy Matter?

- Higher entropy: More uncertainty, so the data is more challenging to classify

- Lower entropy: More predictability, so the data is more straightforward to classify

Entropy in data science helps machine learning models organize and refine datasets, leading to better decision-making. It is especially useful in decision trees, where it helps determine the best way to split data for classification.

What is the Origin of Entropy Information Theory?

Claude Shannon’s groundbreaking 1948 paper, A Mathematical Theory of Communication, laid the foundation for information theory, a field that transformed how we understand data, uncertainty, and communication. His work wasn’t just abstract mathematics. It grew out of a real-world problem: how to quantify the information carried, and lost, when signals travel over noisy channels such as phone lines.

To address this, Shannon introduced the concept of information entropy, a measure of uncertainty and randomness in data. In plain words, if you already know what someone is going to say, there’s little surprise and, thus, low entropy. However, if their message is unpredictable, it carries high entropy, meaning it contains more information.

At its foundation, entropy helps models gauge how much disorder exists in a dataset. This principle is invaluable for handling uncertainty in machine learning predictions, particularly in algorithms like decision trees, where entropy determines the most effective way to split data for classification.

However, the impact doesn’t stop there. Information theory is deeply embedded in data compression, cryptography, and AI-driven predictions. This restructures how we store, transmit, and interpret information today with intelligent and user-centric models.

In short, Shannon’s entropy and information theory aren’t just a theoretical curiosity. They form the backbone of modern data science. By working with entropy, we unlock smarter algorithms, better decision-making, and models that recognize patterns with high accuracy.

What is the Entropy Formula in Machine Learning?

Entropy in machine learning is a fundamental concept used to measure uncertainty or impurity in a dataset. It plays a crucial role in decision tree algorithms, where it helps determine the best feature to split data for optimal classification. Derived from information theory, entropy quantifies the randomness in a system, where higher entropy indicates a more significant disorder and lower entropy signifies a more organized dataset. Machine learning models can make data-driven decisions by leveraging entropy, improving predictive accuracy and efficiency. Understanding the entropy formula is essential for grasping how decision trees and other machine learning algorithms manage uncertainty to enhance performance.

Shannon’s Formula for Entropy

The entropy of a random variable X is calculated as:

$$H(X) = -\sum_{x} p(x) \log_2 p(x)$$

Where:

- $H(X)$ = entropy of the random variable X, measured in bits

- $p(x)$ = probability of an event x occurring

- $-\log_2 p(x)$ = information content of x (the rarer the event, the larger this value)

Suppose you are flipping a fair coin. The entropy would be:

$$H = -\left(0.5 \log_2 0.5 + 0.5 \log_2 0.5\right) = 1$$

This is the maximum possible entropy for two outcomes, since the result is as unpredictable as it can be. However, if we use a biased coin where heads occur 90% of the time, the entropy drops to about 0.47, meaning the result is more predictable.

In machine learning, the same calculation measures how uncertain a dataset is before the model makes a decision.
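As a minimal sketch of the formula above, the following Python snippet computes Shannon entropy for the fair and biased coins. The function name `entropy` and the probability lists are illustrative choices, not part of any particular library.

```python
import math

def entropy(probabilities):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    # Outcomes with p == 0 are skipped: they contribute nothing to the sum.
    return sum(-p * math.log2(p) for p in probabilities if p > 0)

fair_coin = entropy([0.5, 0.5])    # two equally likely outcomes
biased_coin = entropy([0.9, 0.1])  # heads 90% of the time
certain = entropy([1.0])           # only one possible outcome

print(f"fair coin:   {fair_coin:.3f} bits")
print(f"biased coin: {biased_coin:.3f} bits")
print(f"certain:     {certain:.3f} bits")
```

The fair coin comes out at exactly 1 bit and the 90/10 coin at roughly 0.47 bits, matching the intuition that a more predictable source carries less uncertainty.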

What is Entropy Based Decision Making in Machine Learning?

When it comes to entropy-based decision making in machine learning, few algorithms are as easy to grasp and as powerful as decision trees. Decision tree models mimic the way humans make decisions, breaking down complex problems into a series of logical steps. Whether you’re classifying emails as spam, approving bank loans, or predicting customer churn, decision trees provide a structured, easy-to-interpret way to arrive at a decision.

How Decision Trees Work With The Entropy of a System

Think of an entropy-based decision tree as a flowchart that asks a series of yes/no questions, systematically narrowing down the possibilities until it reaches a final answer. The goal of this flowchart is to divide the data into smaller, more homogeneous groups, which makes predictions more accurate.

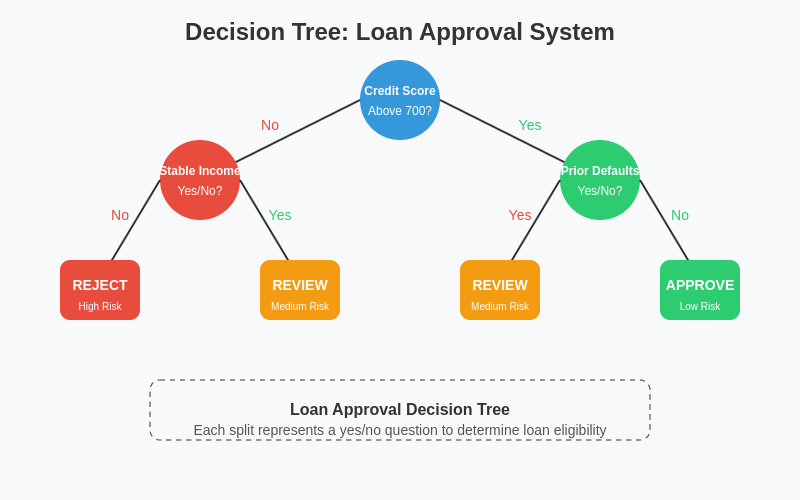

Let’s take a real-world example: loan approvals in a bank.

Suppose you’re building an intelligent system that decides whether an applicant qualifies for a loan. To reduce entropy, the decision tree might ask questions like these:

- Is the applicant’s credit score above 700?

- Does the applicant have a stable income?

- Has the applicant defaulted on loans before?

Each of these questions splits the dataset into groups based on the response. Applicants with a credit score above 700 might be more likely to get approved, while those below may require further checks. At every step, the model reduces entropy, essentially cutting down uncertainty, by focusing only on the most informative features.
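To see the reduction in numbers, here is a worked example with made-up figures (the applicant counts are assumptions for illustration, not real lending data). Suppose 10 past applicants, 5 approved and 5 rejected, so the entropy before any question is:

$$H_{\text{before}} = -\left(\tfrac{5}{10}\log_2\tfrac{5}{10} + \tfrac{5}{10}\log_2\tfrac{5}{10}\right) = 1$$

Now assume the credit score question puts 5 applicants in the "above 700" group (4 approved, 1 rejected) and 5 in the "700 or below" group (1 approved, 4 rejected). Each group has the same entropy:

$$H_{\text{group}} = -\left(0.8\log_2 0.8 + 0.2\log_2 0.2\right) \approx 0.722$$

The entropy after the split is the size-weighted average of the two groups:

$$H_{\text{after}} = \tfrac{5}{10}(0.722) + \tfrac{5}{10}(0.722) \approx 0.722$$

So this single question lowers the entropy from 1 to about 0.722, a reduction of roughly 0.278.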

Components of an Entropy-Based Decision Tree

Now that we understand how entropy-based decision trees work, let us look at their components. Each part plays a role in systematically reducing entropy and guiding the model toward an accurate decision.

1. Root Node

The root node is the first split in the tree. The model evaluates the candidate features and chooses the one to split on based on entropy and information gain, so that the first division is as meaningful as possible.

For instance, the root node might check the applicant’s credit score. A score above 700 may indicate low risk, while a lower score could require further analysis. If approvals and rejections are evenly balanced before any split, the entropy at the root is 1, its maximum for two classes.

2. Decision Nodes

These internal nodes represent points where the data gets divided based on a condition. Each decision node narrows down the dataset further, reducing entropy and making the prediction more precise.

3. Branches

Branches connect the nodes and represent the possible answers (Yes/No, True/False) at each decision point. They guide the data down different paths depending on the applicant’s attributes. If an applicant has a stable income, they might proceed down a “Likely to be approved” branch. If not, they might be directed toward further checks.

4. Leaf Nodes

Leaf nodes are where the decision tree stops—these nodes represent the final classification or decision. At this stage, the model has gathered enough information to make a conclusive prediction.
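The four components can be sketched in a few lines of Python. This is a simplified, ID3-style illustration rather than a production implementation: the applicant records, the feature names, and the `min_gain` stopping threshold are all hypothetical. Nested dictionaries play the role of the root and decision nodes, dictionary keys are the branches, and plain strings are the leaf nodes.

```python
import math
from collections import Counter

def entropy(labels):
    total = len(labels)
    return sum(-(n / total) * math.log2(n / total) for n in Counter(labels).values())

def information_gain(rows, feature, target):
    # Entropy before the split minus the size-weighted entropy after it.
    parent = entropy([r[target] for r in rows])
    remainder = 0.0
    for value in {r[feature] for r in rows}:
        subset = [r[target] for r in rows if r[feature] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return parent - remainder

def build_tree(rows, features, target, min_gain=1e-9):
    labels = [r[target] for r in rows]
    majority = Counter(labels).most_common(1)[0][0]
    # Leaf node: the group is pure, or there are no questions left to ask.
    if len(set(labels)) == 1 or not features:
        return majority
    gains = {f: information_gain(rows, f, target) for f in features}
    best = max(gains, key=gains.get)
    # Leaf node: even the best question barely reduces entropy.
    if gains[best] < min_gain:
        return majority
    remaining = [f for f in features if f != best]
    branches = {}
    for value in sorted({r[best] for r in rows}):
        subset = [r for r in rows if r[best] == value]
        branches[value] = build_tree(subset, remaining, target, min_gain)
    # Root or decision node: a question plus one branch per answer.
    return {"feature": best, "branches": branches}

def predict(tree, row):
    # Follow branches until a leaf is reached.
    # An answer never seen during training would raise KeyError here.
    while isinstance(tree, dict):
        tree = tree["branches"][row[tree["feature"]]]
    return tree

# Hypothetical applicants: (credit above 700, stable income, prior default, outcome)
records = [
    (True, True, False, "approved"), (True, True, False, "approved"),
    (True, False, False, "approved"), (True, True, True, "rejected"),
    (False, True, False, "rejected"), (False, False, False, "rejected"),
    (False, False, True, "rejected"), (False, True, True, "rejected"),
]
names = ["credit_above_700", "stable_income", "prior_default", "outcome"]
applicants = [dict(zip(names, rec)) for rec in records]

tree = build_tree(applicants, names[:3], "outcome")
new_applicant = {"credit_above_700": True, "stable_income": False, "prior_default": False}
decision = predict(tree, new_applicant)
print(tree)
print(decision)
```

On this toy data, the credit score question has the largest information gain, so it becomes the root node. Applicants at or below 700 reach a leaf immediately, while the rest are asked about prior defaults.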

What is the Importance of Entropy in Algorithms?

According to a McKinsey study, businesses that rely on data-driven decision-making improve efficiency by 20%. Entropy-driven models contribute to this transformation by enabling organizations to make better predictions, automate smarter decisions, and augment industry performance.

1. Driving Smarter Decision-Making

Machine learning models thrive on structure, and entropy in machine learning helps provide it by measuring how much uncertainty remains in the data. The lower the entropy, the clearer the pattern, which allows models to classify information more effectively.

Example: In a fraud detection system, a model using entropy can quickly differentiate between a normal transaction (low entropy) and a suspicious transaction (high entropy), leading to faster fraud prevention.

2. Feature Selection

Not all features in a dataset are equally valuable. Entropy helps identify the most informative features, those that provide the greatest reduction in uncertainty, allowing models to focus only on what truly matters.

Example: In a loan approval system, credit score might have the highest information gain, making it the primary splitting criterion in a decision tree that uses entropy for feature selection.

3. Preventing Overfitting with Smarter Pruning

One of the biggest challenges in machine learning is overfitting, where a model becomes too complex and learns noise instead of patterns. Entropy-based pruning assists in simplifying decision trees by eliminating branches that don’t contribute to better predictions, keeping models generalized and efficient.

Example: If a decision tree for spam detection overanalyzes rare words that appear only once, entropy-based pruning will remove those unnecessary splits, ensuring the model remains robust.

4. Powering Deep Learning with Cross-Entropy Loss

Entropy in deep learning plays a major role in neural networks, particularly in classification tasks. Cross-entropy loss measures how far a model’s predicted probabilities deviate from the actual labels, enabling better training and improved accuracy.

Example: In image recognition, cross-entropy loss penalizes a deep learning model when it assigns a low probability to the correct class, and training adjusts the weights to reduce that loss.
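A minimal sketch of cross-entropy loss for a single example is shown below. It assumes a three-class problem with a one-hot true label and uses the natural logarithm, as deep learning libraries commonly do. The function name, the `eps` clipping value, and the probability vectors are illustrative assumptions.

```python
import math

def cross_entropy(true_dist, predicted_dist, eps=1e-12):
    """Cross-entropy between a true distribution and predicted probabilities."""
    # Clip predictions at eps so that log(0) never occurs.
    return sum(
        -t * math.log(max(q, eps))
        for t, q in zip(true_dist, predicted_dist)
        if t > 0
    )

one_hot = [1, 0, 0]  # the correct class is class 0

confident_right = cross_entropy(one_hot, [0.90, 0.05, 0.05])
unsure = cross_entropy(one_hot, [0.40, 0.30, 0.30])
confident_wrong = cross_entropy(one_hot, [0.05, 0.90, 0.05])

print(f"confident and right: {confident_right:.3f}")
print(f"unsure:              {unsure:.3f}")
print(f"confident and wrong: {confident_wrong:.3f}")
```

The loss grows as the probability assigned to the correct class shrinks, so a confident wrong prediction is penalized far more heavily than an unsure one.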

How is Information Gain in Machine Learning Relevant to Entropy?

Think of information gain in machine learning as the guiding light in decision-making. It tells us which feature cuts through the noise and brings clarity. The goal? Reduce uncertainty (entropy) and make smarter splits. Simply put, the bigger the IG, the better the split.

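The formula for Information Gain in Machine Learning, for a dataset S split on a feature A:

$$IG(S, A) = H(S) - \sum_{v \in \text{values}(A)} \frac{|S_v|}{|S|} H(S_v)$$

Here H(S) is the entropy before the split, S_v is the subset of S where feature A takes the value v, and the sum is the size-weighted average entropy after the split.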

Example: Loan Approval System

Let’s say you’re building a model that uses entropy to decide loan approvals. You have three features:

| Feature | Usefulness for Splitting | Entropy Reduction | Information Gain (IG) |
| --- | --- | --- | --- |
| Credit Score | A strong indicator of creditworthiness | High | High |
| Employment Status | Somewhat useful but not decisive | Moderate | Moderate |
| Email Address | Irrelevant for loan decisions | None | Zero |

In short, information gain in machine learning and entropy work hand in hand. Entropy measures uncertainty, and information gain measures how much a split reduces it. That’s how machine learning makes sharp, data-driven decisions.
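The ranking in the table can be reproduced on a small scale. The sketch below uses eight invented applicant records (the values, including the email domains, are assumptions chosen so that the email feature is statistically unrelated to the outcome) and ranks the three features by information gain.

```python
import math
from collections import Counter

def entropy(labels):
    total = len(labels)
    return sum(-(n / total) * math.log2(n / total) for n in Counter(labels).values())

def information_gain(rows, feature, target="approved"):
    # Entropy of the full dataset minus the weighted entropy of each subset.
    parent = entropy([r[target] for r in rows])
    remainder = 0.0
    for value in {r[feature] for r in rows}:
        subset = [r[target] for r in rows if r[feature] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return parent - remainder

# Hypothetical records: (credit score band, employment status, email domain, approved)
records = [
    ("high", "employed", "gmail", "yes"),
    ("high", "employed", "gmail", "yes"),
    ("high", "unemployed", "yahoo", "yes"),
    ("high", "employed", "gmail", "no"),
    ("low", "employed", "yahoo", "yes"),
    ("low", "unemployed", "gmail", "no"),
    ("low", "unemployed", "yahoo", "no"),
    ("low", "employed", "yahoo", "no"),
]
names = ["credit_score", "employment_status", "email_domain", "approved"]
applicants = [dict(zip(names, rec)) for rec in records]

gains = {f: information_gain(applicants, f) for f in names[:3]}
ranked = sorted(gains, key=gains.get, reverse=True)

for feature in ranked:
    print(f"{feature:18s} IG = {gains[feature]:.3f}")
```

With this data, credit score has the largest gain, employment status a smaller one, and the email domain a gain of zero, because approvals are split identically within each domain.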

Use of Entropy and Uncertainty in Predictions for Diverse Industries

Different industries apply entropy in machine learning in different ways, depending on their requirements. The table below summarizes a few of these uses.

| Industry | Application of Entropy | Impact on Predictions |
| --- | --- | --- |
| Finance | Fraud detection & risk assessment | Reduces false positives in fraud detection |
| Healthcare | Disease diagnosis & prognosis | Improves accuracy in predicting illnesses |
| E-commerce | Personalized recommendations | Enhances user experience with better suggestions |
| Cybersecurity | Threat detection & anomaly analysis | Entropy in cybersecurity identifies breaches faster |
| Manufacturing | Predictive maintenance | Prevents unexpected equipment failures |

How Does a Machine Learning Development Company Assist in Entropy-Based Decision-Making?

A machine learning development company revolutionizes entropy-based decision-making, turning uncertainty into actionable insights! By leveraging information gain in decision trees and cross-entropy loss in deep learning, they supercharge fraud detection, risk assessment, and predictive analytics, helping businesses stay ahead of the curve.

From fortifying cybersecurity to optimizing financial strategies and enhancing healthcare diagnostics, these companies infuse AI with entropy-driven intelligence. By detecting anomalies, minimizing risks, and sharpening predictions, they empower businesses to make smarter, faster, and more confident decisions, driving success like never before.


Frequently Asked Questions

How does entropy prevent overfitting?

Entropy on its own does not prevent overfitting, but entropy-based criteria help models generalize to new data rather than memorize patterns from the training set. In decision trees, for example, information gain (based on entropy in machine learning) guides feature selection, and splits whose information gain is too small can be skipped or pruned away, which avoids overly complex trees that fit noise instead of meaningful trends. Used this way, entropy helps models balance learning patterns against excessive complexity, improving real-world performance.

How is entropy used in natural language processing (NLP)?

In NLP, entropy helps in tasks like language modeling, text classification, and information retrieval. Cross-entropy loss is commonly used to train neural networks for text generation and speech recognition, ensuring models predict words with high accuracy. Additionally, entropy-based techniques help assess the informativeness of words in text summarization and optimize token selection in machine translation and chatbots.

What are the challenges that come when working with entropy in machine learning?

While entropy is a powerful tool, it comes with challenges like computational complexity, sensitivity to noise, and difficulty in high-dimensional data. Calculating entropy for large datasets can be resource-intensive, and small variations in data can lead to misleading entropy values. Additionally, in some cases, entropy alone may not be sufficient, requiring hybrid approaches with mutual information or cross-entropy techniques for more robust decision-making.

What is the role of joint entropy in model interactions?

Joint entropy in machine learning measures the combined uncertainty of two or more variables. It plays a role in model interactions, particularly in feature selection, information gain, and mutual information calculations. Mutual information, which is computed from the individual and joint entropies, quantifies how much information two variables share. This helps identify informative feature combinations and spot redundant features, improving model performance. In decision trees and classification tasks, features that share more information with the target produce splits that reduce uncertainty more effectively, leading to better feature selection and enhanced predictive accuracy.
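As a small illustration, joint entropy can be estimated by treating each pair of values as a single outcome, and mutual information follows from the identity I(X; Y) = H(X) + H(Y) − H(X, Y). The function names and the toy binary columns below are assumptions made for this sketch.

```python
import math
from collections import Counter

def entropy_of(values):
    """Empirical Shannon entropy, in bits, of a sequence of observations."""
    total = len(values)
    return sum(-(n / total) * math.log2(n / total) for n in Counter(values).values())

def joint_entropy(xs, ys):
    # Each (x, y) pair is counted as one combined outcome.
    return entropy_of(list(zip(xs, ys)))

def mutual_information(xs, ys):
    return entropy_of(xs) + entropy_of(ys) - joint_entropy(xs, ys)

feature = [0, 0, 1, 1]
duplicate = [0, 0, 1, 1]  # carries exactly the same information as `feature`
unrelated = [0, 1, 0, 1]  # statistically independent of `feature` in this sample

mi_duplicate = mutual_information(feature, duplicate)
mi_unrelated = mutual_information(feature, unrelated)

print(f"H(feature, duplicate) = {joint_entropy(feature, duplicate):.1f} bits")
print(f"H(feature, unrelated) = {joint_entropy(feature, unrelated):.1f} bits")
print(f"I(feature; duplicate) = {mi_duplicate:.1f} bits")
print(f"I(feature; unrelated) = {mi_unrelated:.1f} bits")
```

A duplicated column adds no new uncertainty, so the joint entropy stays at 1 bit and the mutual information is 1 bit. An independent column raises the joint entropy to 2 bits and shares nothing, giving a mutual information of 0.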