Behind every AI-assisted marvel is a tech stack: a combination of frameworks, tools, algorithms, and cloud services that brings products to life. Think of these as the brain, muscle, and fuel of AI. Without the right tech stack, AI models are just fancy code collecting dust (and a few bugs, too). Have you ever considered what Netflix’s recommendation engine and Tesla’s self-driving cars have in common? Each is powered by a rock-solid AI tech stack.

Businesses, from Fortune 500 companies to other enterprises, are investing heavily in AI model development platforms. To give you a figure, artificial intelligence spending is projected to hit $407 billion by 2027. But with a barrage of tools like TensorFlow, PyTorch, and OpenCV, where do you even begin? And how do you pick the right mix for NLP, computer vision, or predictive analytics? Don’t sweat it; we’re breaking it all down.

In this guide, we will provide everything you need to know about the AI technology stack. Whether you’re a startup founder or a CTO scaling up, we’ll show you how to build (or fix) your AI solution. So, let’s get started by understanding the concept first.

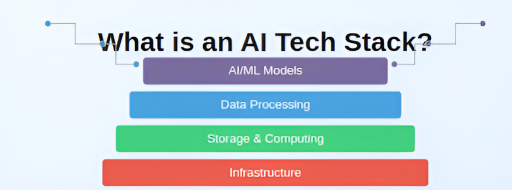

What is an AI Tech Stack?

An artificial intelligence tech stack is the core collection of tools, technologies, and infrastructure required to design, develop, and manage AI systems. It is the central architecture of AI projects, enabling developers and businesses to optimize operations and provide cognitive solutions.

AI tools and technologies span the full range of AI development operations, from data processing and model creation to deployment and monitoring. A well-chosen stack supports efficiency, effectiveness, and adaptability, and provides a methodical approach to integrating AI capabilities into applications.

Understanding AI Tech Stack Layers

The AI tech stack is a structural configuration consisting of interconnected layers, each crucial to the system’s effectiveness. Let’s explore the core components of AI software architecture in more detail below—

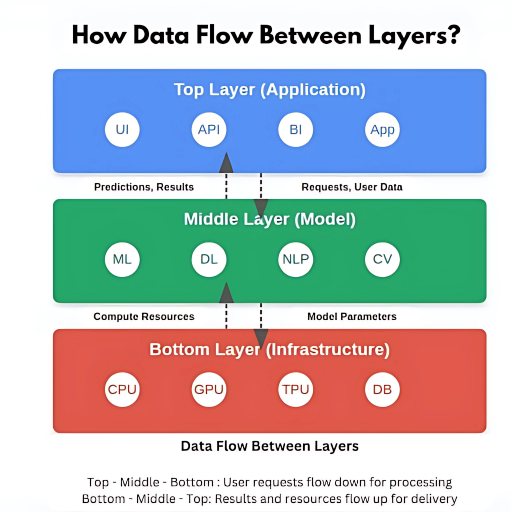

Application Layer

The topmost layer is the application layer, which is where users directly experience the system.

- It covers everything from REST APIs controlling client-side and server-side data flow to web applications.

- This layer handles essential tasks, including gathering input using GUIs, displaying visualizations using dashboards, and delivering data-driven insights using API endpoints.

- Django for the backend and React for the front end are often used in this layer because of their strengths in tasks like data validation, user authentication, and API routing.

Model Layer

The model layer powers data processing and decision-making. It acts as a mediator, taking in information from the application layer, performing computationally demanding tasks, and then sending back the insights for display or action.

- Specialized AI frameworks and libraries like TensorFlow and PyTorch offer various tools for machine learning tasks, such as computer vision, predictive analytics, and natural language processing services.

- This layer includes hyperparameter optimization, model training, and feature engineering.
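
Hyperparameter optimization, mentioned above, can be illustrated with a small grid search. The sketch below is plain Python rather than TensorFlow or PyTorch, and everything in it (the toy dataset, the `train` and `mse` helpers, the candidate values) is an assumption made for illustration, not a recommended configuration.

```python
from itertools import product

# Toy data following y = 3x (an assumption for illustration only).
TRAIN = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
VALID = [(4.0, 12.0), (5.0, 15.0)]


def train(learning_rate, epochs):
    """Fit a one-parameter model y = w * x with plain gradient descent."""
    w = 0.0
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in TRAIN) / len(TRAIN)
        w -= learning_rate * grad
    return w


def mse(w, data):
    """Mean squared error of the model on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def grid_search(learning_rates, epoch_options):
    """Try every combination and keep the one with the lowest validation error."""
    results = []
    for lr, epochs in product(learning_rates, epoch_options):
        w = train(lr, epochs)
        results.append({"lr": lr, "epochs": epochs, "w": w, "val_mse": mse(w, VALID)})
    return min(results, key=lambda r: r["val_mse"]), results


best, all_results = grid_search([0.001, 0.01, 0.1], [10, 100])
print(best)
```

The same loop structure applies to real models; only `train` and the validation metric change. Dedicated tuning libraries typically replace the exhaustive grid with smarter search strategies.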

Infrastructure Layer

Both model training and inference depend heavily on the infrastructure layer. CPUs, GPUs, and TPUs are among the computational resources allocated and managed by this layer.

- To improve scalability and fault tolerance and to reduce latency, this layer uses orchestration technologies such as Kubernetes for container management.

- Services like AWS’s EC2 instances and Azure’s AI-specific accelerators can manage the demanding computation on the cloud side.

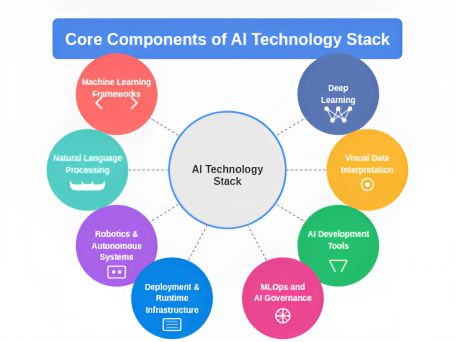

Core Components of AI Technology Stack

AI architecture design comprises several modules, each focusing on a different task but coupled to work as a whole. The essential components of the artificial intelligence technology stack are:

1. Machine Learning Frameworks

Machine learning frameworks propel the AI development technology stack. They are essential because they include pre-built tools, extensive libraries, and scalability, enabling teams to develop, train, and deploy models effectively.

Here’s a table with the five most used machine learning frameworks:

| Framework | Description | Best For |
| --- | --- | --- |
| TensorFlow | Google’s open-source ML framework | AI research, production models |
| PyTorch | Meta’s deep learning framework | Research, neural networks |
| Scikit-Learn | Python ML library | Classical ML, data science |
| XGBoost | Gradient boosting framework | Predictive modeling, competitions |
| Keras | High-level API for deep learning | Quick prototyping, deep learning |

2. Deep Learning

Deep learning work relies on frameworks such as TensorFlow, PyTorch, and Keras. These frameworks make it easier to construct and train complex neural network topologies, such as recurrent neural networks (RNNs) for sequential data processing and convolutional neural networks (CNNs) for image recognition.

3. Natural Language Processing

NLP libraries, such as NLTK and spaCy, are the foundation for processing human language. Transformer-based models, like GPT-4 or BERT, offer deeper understanding and context recognition for sophisticated applications like sentiment analysis. These NLP tools and models are added to the AI development stack after the deep learning components.

4. Visual Data Interpretation

OpenCV and other computer vision technologies are crucial for interpreting visual data. CNNs can be used for challenging tasks such as facial recognition and object identification. Beyond that, machine learning development services enable multi-modal information processing.

5. Robotics and Autonomous Systems

Robotics and autonomous systems are physical applications that employ sensor fusion techniques. They rely on Simultaneous Localization and Mapping (SLAM) to build a map and track their position within it, and on decision-making techniques like Monte Carlo Tree Search (MCTS) to plan actions. Together with the machine learning tech stack and computer vision components, these features enable the AI to interact with its surroundings.

6. AI Development Tools

AI development tools and frameworks are essential for creating, evaluating, and working together on AI projects. These tools improve productivity by streamlining workflows. Here are the fundamental tools—

- Integrated Development Environments (IDEs): Well-known IDEs like PyCharm and Jupyter Notebook make it easier to write and debug code for AI-based custom app development.

- No-Code and Low-Code Platforms: DataRobot and H2O.ai are AI software development tools that democratize access to AI capabilities by allowing non-technical people to create AI models.

- Platforms for Tracking Experiments: Tools such as MLflow and Weights & Biases help teams organize and monitor experiments, supporting efficiency and reproducibility.

7. Deployment and Runtime Infrastructure

AI models can function efficiently in commercial settings thanks to the deployment and runtime infrastructure. Essential elements consist of:

- Cloud Providers: AWS, Azure, and GCP provide AI-specific scalable computing and storage solutions.

- Containerization: Tools like Docker and Kubernetes make consistent deployment across environments possible and support smooth scaling.

- Edge AI Deployment: Running models on edge devices improves real-time decision-making and lowers latency.

Whether in the cloud, on-premises, or at the edge, dependable performance from AI models is ensured via efficient deployment infrastructure.

8. MLOps and AI Governance

To manage the lifecycle of AI models and ensure their ethical and legal use, MLOps and AI governance are essential. Here are the key components:

- Model Training Pipelines: Automating model training pipelines increases productivity and reduces errors during updates.

- Version Control for Models: Tools such as DVC (Data Version Control) support reproducibility and track model changes over time.

- Bias Detection, Auditing, and Compliance: Frameworks such as IBM AI Fairness 360 help detect and reduce bias, supporting ethical AI practices.

The foundation for operationalizing AI at scale while upholding compliance and transparency is provided by MLOps and governance frameworks.

Here’s a table so that you can skim through the above-mentioned AI tech stack—

| Category | Key Components |
| --- | --- |
| Deep Learning | TensorFlow, PyTorch, Keras, RNNs, CNNs |
| Natural Language Processing | NLTK, spaCy, GPT-4, BERT |
| Visual Data Interpretation | OpenCV, CNNs |
| Robotics & Autonomous Systems | MCTS, SLAM, Sensor Fusion |
| AI Development Tools | PyCharm, Jupyter Notebook, DataRobot, H2O.ai, MLflow, Weights & Biases |
| Deployment & Runtime Infrastructure | AWS, Azure, GCP, Docker, Kubernetes, Edge AI |
| MLOps & AI Governance | Automated Training Pipelines, DVC (Data Version Control), IBM AI Fairness 360 |

Phases of Advanced Tech Stack for AI

Building, implementing, and expanding AI systems requires a rigorous methodology. Let’s examine each stage to determine the significance of each layer—

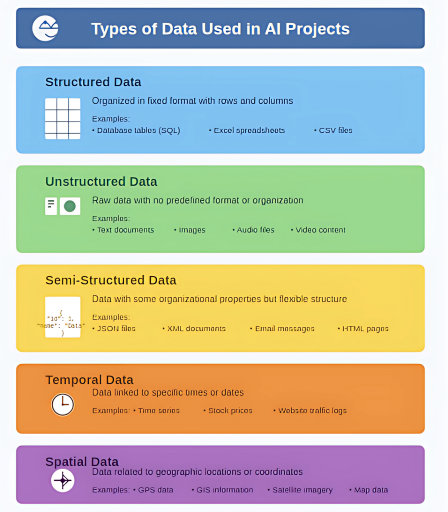

Phase 1: Data Management Infrastructure

Data management infrastructure is crucial for collecting, enhancing, and making data usable. This phase is divided into sections focusing on data handling, collection, storage, processing, and transformation.

Stage 1: Data Acquisition

Data is the foundation of any AI application. To extract valuable insights, an AI technology stack needs a strong infrastructure for efficient data management:

- Data Aggregation: Collects and compiles data from multiple sources for analysis, reporting, and insights. Sources include internal data, external data, and open data.

Stage 2: Data Transformation and Storage

Data storage solutions securely store, manage, and retrieve digital data using cloud AI platforms, on-premise, or hybrid systems.

ETL (Extract, Transform, Load): It is a data processing procedure that gathers data from sources, transforms it into usable forms, and then loads it into storage systems to be analyzed.

Databases: Databases store, organize, and manage data efficiently, enabling quick retrieval, updates, and secure access.

Data Lakes: Store vast raw, unstructured data from various sources, enabling flexible data analytics and processing.

Data Warehouses: Store structured, processed data optimized for fast queries, reporting, and business intelligence insights.
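
The ETL flow described above can be sketched with nothing more than Python's standard library. This is a minimal illustration, not a production pipeline: the CSV content, the `orders` table, and the cleaning rules are all hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file or an API.
RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,,usd
3,5.00,eur
"""


def extract(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with a missing amount and normalize types and casing."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into a relational table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, currency TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT order_id, amount, currency FROM orders ORDER BY order_id").fetchall()
print(loaded)
```

Keeping the three steps as separate functions makes each one easy to test and swap out, for example replacing `load` when the target changes from a database to a data lake.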

Stage 3: Data Processing

Converts raw data into meaningful insights through techniques like cleansing, transformation, and analysis.

Data Annotation: The gathered data is labeled, which is necessary for supervised machine learning.

Synthetic Data Generation: Despite the abundance of available data, gaps still exist in some domains. Synthetic data mimics real-world data patterns and is used for training AI models, testing applications, and preserving privacy without exposing actual data.

Streaming: Real-time data analysis for applications requiring prompt insights.

Batch Processing: Processes large data sets in scheduled batches, ensuring efficiency for complex computations and reporting.
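
The difference between streaming and batch processing is easiest to see side by side. In this small sketch, which uses made-up sensor readings, the batch function needs the whole dataset up front, while the streaming function updates its answer as each value arrives.

```python
def batch_mean(values):
    """Batch processing: the full dataset is available before computing."""
    return sum(values) / len(values)


def streaming_mean(stream):
    """Streaming: update the result incrementally as each record arrives."""
    count, mean = 0, 0.0
    for value in stream:
        count += 1
        mean += (value - mean) / count  # incremental mean update
        yield mean


# Hypothetical readings, for illustration only.
readings = [10.0, 12.0, 11.0, 15.0]
running = list(streaming_mean(iter(readings)))
print(running[-1], batch_mean(readings))
```

Both approaches end at the same value; the streaming version simply makes an up-to-date estimate available after every record, which is what applications needing prompt insights depend on.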

Stage 4: Data Versioning and Lineage

Data Version Control (DVC) is a technology-agnostic tool that works with a wide range of storage formats. For lineage, systems like Pachyderm provide data versioning and a comprehensive record of data history, so teams can trace how each dataset came to be.
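
To make the idea of versioning and lineage concrete, here is a conceptual sketch based on content hashing. It is not how DVC or Pachyderm are invoked; the `dataset_version` and `commit` helpers are hypothetical names used only to show the underlying principle that a version identifier is derived from the data itself and each version points to its parent.

```python
import hashlib
import json


def dataset_version(records):
    """Return a content hash that changes whenever the data changes."""
    # Serialize deterministically so identical data always hashes the same.
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


lineage = []  # ordered history of version entries


def commit(records, note):
    """Record a new version along with its parent, forming a lineage chain."""
    parent = lineage[-1]["version"] if lineage else None
    entry = {"version": dataset_version(records), "parent": parent, "note": note}
    lineage.append(entry)
    return entry


v1 = commit([{"id": 1, "label": "cat"}], "initial import")
v2 = commit([{"id": 1, "label": "dog"}], "relabelled record 1")
print(v2["parent"] == v1["version"])
```

Because the identifier depends only on content, two teams holding the same data arrive at the same version, and any silent change to a record shows up as a new hash.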

Stage 5: Data Surveillance

Censius and other automated monitoring systems help maintain data quality by identifying discrepancies, such as missing values, type conflicts, and statistical aberrations.
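
The three kinds of discrepancy named above (missing values, type conflicts, and statistical aberrations) can each be checked with a few lines of code. The sketch below is a simplified stand-in for what monitoring tools automate, not the API of Censius or any other product; the `quality_report` function, the `age` column, and the z-score threshold are assumptions.

```python
from statistics import mean, stdev


def quality_report(rows, column, z_threshold=2.0):
    """Flag missing values, type conflicts, and statistical outliers in one column."""
    missing = [i for i, row in enumerate(rows) if row.get(column) is None]
    wrong_type = [
        i for i, row in enumerate(rows)
        if row.get(column) is not None and not isinstance(row[column], (int, float))
    ]
    numeric = [
        (i, row[column]) for i, row in enumerate(rows)
        if isinstance(row.get(column), (int, float))
    ]
    values = [v for _, v in numeric]
    outliers = []
    if len(values) > 1 and stdev(values) > 0:
        mu, sigma = mean(values), stdev(values)
        # A value is an outlier if it lies more than z_threshold deviations from the mean.
        outliers = [i for i, v in numeric if abs(v - mu) / sigma > z_threshold]
    return {"missing": missing, "wrong_type": wrong_type, "outliers": outliers}


# Hypothetical records: one missing value, one string, one implausible age.
ages = [31, 29, None, "unknown", 30, 32, 28, 30, 31, 29, 30, 250]
rows = [{"age": a} for a in ages]
report = quality_report(rows, "age")
print(report)
```

The report lists row indices rather than fixing anything, which mirrors how monitoring usually works: detection is automated, while the decision about what to do with a flagged record stays with the data team.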

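| Stage | Key Components |
| --- | --- |
| Stage 1: Data Acquisition | Data Aggregation, Internal Data, External Data, Open Data |
| Stage 2: Data Transformation and Storage | ETL (Extract, Transform, Load), Databases, Data Lakes, Data Warehouses |
| Stage 3: Data Processing | Data Annotation, Synthetic Data Generation, Streaming, Batch Processing |
| Stage 4: Data Versioning and Lineage | Data Version Control (DVC), Pachyderm |
| Stage 5: Data Surveillance | Censius, Fiddler, Grafana |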

Phase 2: Model Architecture and Tracking

In AI and machine learning, modeling is an iterative process that involves repeated refinement and evaluation. It begins after the data has been gathered, appropriately stored, analyzed, and transformed into useful features.

- Algorithm Selection: TensorFlow, PyTorch, Scikit-learn, and MXNet are examples of machine learning libraries. Once a library that satisfies the project requirements is chosen, the standard processes of model selection, parameter tuning, and iterative experimentation can start.

- Development Ecosystem: The integrated development environment (IDE) simplifies AI software development. It streamlines the workflow by combining essential elements, including code editors, build processes, and debugging tools. PyCharm is notable for its ease of managing code linking and dependencies. Visual Studio Code is another reliable IDE that works well across operating systems and integrates with third-party tools like PyLint and Node.js. Other environments, such as Jupyter and Spyder, are primarily used during the prototyping stage.

- Model Tracking: This involves monitoring model performance, versions, and metrics using tools like MLflow, Weights & Biases, or TensorBoard. Tracking supports reproducibility, performance optimization, and efficient model management throughout the lifecycle, and gives teams a shared, collaborative record for machine learning projects.
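
At its core, model tracking means recording which parameters produced which metrics so that runs can be compared later. The following sketch shows that idea with a hypothetical `RunTracker` class that appends runs to a JSON Lines file. It is deliberately not the MLflow or Weights & Biases API; those tools add user interfaces, artifact storage, and collaboration on top of the same principle.

```python
import json
import tempfile
import time
from pathlib import Path


class RunTracker:
    """Append-only log of experiment runs, stored as JSON lines."""

    def __init__(self, path):
        self.path = Path(path)

    def log_run(self, params, metrics):
        """Store one run's parameters and metrics with a timestamp."""
        record = {"timestamp": time.time(), "params": params, "metrics": metrics}
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")

    def runs(self):
        """Read back every logged run."""
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def best_run(self, metric, higher_is_better=True):
        """Return the run with the best value for the given metric."""
        pick = max if higher_is_better else min
        return pick(self.runs(), key=lambda r: r["metrics"][metric])


# Hypothetical runs, for illustration only.
log_file = Path(tempfile.mkdtemp()) / "runs.jsonl"
tracker = RunTracker(log_file)
tracker.log_run({"lr": 0.1}, {"f1": 0.81})
tracker.log_run({"lr": 0.01}, {"f1": 0.86})
best_tracked = tracker.best_run("f1")
print(best_tracked["params"])
```

An append-only log is a simple way to keep history reproducible: earlier runs are never overwritten, so any past result can be traced back to the parameters that produced it.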

| Phase | Key Components |
| --- | --- |
| Algorithm Selection | TensorFlow, PyTorch, Scikit-learn, MXNet |
| Development Ecosystem | PyCharm, Visual Studio Code, Jupyter, Spyder, MATLAB |
| Model Tracking | MLflow, Neptune, Weights & Biases |

Criteria for Choosing an Artificial Intelligence Tech Stack

Selecting an AI technology stack requires careful consideration of the project’s technical requirements and features. The programming languages and frameworks used are also primary factors to assess in a generative AI tech stack:

1. Technical Specifications and Functionality

Technical specifications and features of an AI tech stack define its frameworks, computing power, scalability, interoperability, security, and deployment efficiency. Let’s see some crucial aspects that determine your selection criteria.

- Data Formats & Modality: The algorithmic approach depends on whether the AI system will produce text, audio, or images. Recurrent Neural Networks (RNNs) or Long Short-Term Memory networks (LSTMs) are commonly used for textual or auditory data, while Generative Adversarial Networks (GANs) can be used for visual content.

- Computational Complexity: Due to factors such as the volume of data and the number of neural layers and inputs, a strong hardware architecture is required. This may require GPUs and frameworks like TensorFlow or PyTorch.

- Scalability Demands: Cloud-based infrastructures like AWS, Google Cloud Platform, or Azure become essential in situations that require elastic computing capacity, such as creating data variants or supporting many users.

- Model Accuracy & Precision: For critical applications such as autonomous navigation, highly accurate generative approaches such as RNNs or Variational Autoencoders (VAEs) are prioritized.

- Execution Speed & Latency: Optimization techniques that speed up model inference are essential for real-time applications like AI chatbot development or video streaming.

2. Competency and Assets

The AI integration services team’s resources and skill set are crucial when selecting an AI stack. Strategic decision-making is also necessary to avoid steep learning curves obstructing advancement. A few things to think about in this area are:

- Team Expertise & Skills: Match the stack to the team’s proficiency in particular languages or frameworks to speed development.

- Hardware & Compute Power: Consider more sophisticated computational frameworks if Graphics Processing Units (GPUs) or other specialized devices are available.

- Support Ecosystem: Verify that the selected technology stack has thorough instructions, tutorials, and a community to help navigate roadblocks.

- Budget & Cost Optimization: A cost-effective yet capable technological stack may be required due to budgetary restrictions.

- Complexity & System Upkeep: Reliable community or vendor support should make post-deployment upgrades and maintenance simple.

| Category | Key Components |
| --- | --- |
| Data Formats & Modality | RNNs, LSTMs (Textual/Auditory Data), GANs (Visual Data) |
| Computational Complexity | GPUs, TensorFlow, PyTorch |
| Scalability Demands | AWS, Google Cloud Platform, Azure |
| Model Accuracy & Precision | RNNs, Variational Autoencoders (VAEs) |
| Execution Speed & Latency | Conversational bots, Video streaming |

3. System Scalability

The lifespan and flexibility of a system are directly impacted by its scalability. Crucial factors to take into account are:

- Data Volume: Large datasets may require distributed computing frameworks such as Apache Spark to process data efficiently.

- User Traffic: High user concurrency requires architectures that can handle large request volumes, which may call for cloud-based or microservices designs.

- Real-Time Processing: Where data must be processed instantaneously, choose performance-optimized or lightweight models.

- Batch Operations: Systems that need high-throughput batch operations may benefit from distributed computing frameworks.

4. Information Security and Compliance

A secure data environment is essential, especially when working with financial or sensitive data. Important security factors to take into account are:

- Data Integrity: Use an AI infrastructure stack with strong encryption, role-based access, and data masking to prevent unwanted data tampering.

- Model Security: Since AI models are valuable intellectual property, they must be protected against unauthorized access and manipulation.

- Infrastructure Security: Intrusion detection systems, firewalls, and other cybersecurity solutions are required to strengthen the operational infrastructure.

- Regulatory Compliance: The AI tech stack must adhere to industry-specific requirements, such as HIPAA in healthcare or PCI-DSS in finance.

- Authentication & Access Control: Only authorized individuals should interact with the system and its data, so use robust user authentication and authorization procedures.
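
Role-based access and data masking, two of the safeguards listed above, can work together: the role decides whether a caller sees raw data, masked data, or nothing at all. The roles, permissions, and record fields in this sketch are hypothetical and exist only to show the pattern.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "admin": {"read_raw", "read_masked", "write"},
    "analyst": {"read_masked"},
}


def mask_card_number(card_number):
    """Data masking: hide all but the last four digits."""
    return "*" * (len(card_number) - 4) + card_number[-4:]


def read_record(role, record):
    """Return the record at the level of detail the role is authorized to see."""
    permissions = ROLE_PERMISSIONS.get(role, set())
    if "read_raw" in permissions:
        return dict(record)
    if "read_masked" in permissions:
        return {**record, "card_number": mask_card_number(record["card_number"])}
    raise PermissionError(f"role {role!r} may not read this record")


record = {"customer": "A. Example", "card_number": "4111111111111111"}
print(read_record("analyst", record))
```

Unknown roles fall through to a `PermissionError`, so access is denied by default rather than granted by omission.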

Types of Artificial Intelligence Technology Stack

Various use cases and goals require customized strategies. Let’s examine the primary categories and distinguishing features of AI technology stacks.

Lean AI Tech Stack for Startups

Startups frequently have limited resources and must prioritize quick development. Cost-effectiveness and simplicity are prioritized in the lean AI technology stack for startups. For instance:

Tools: Open-source or free frameworks such as Scikit-learn or PyTorch.

Infrastructure: Pay-as-you-go cloud computing systems like Google Cloud and AWS.

Areas of Focus: AutoML tools or pre-trained models to reduce development time.

| Category | Tools and Frameworks |
| --- | --- |
| Tools | Scikit-learn, PyTorch |
| Infrastructure/Cloud | Google Cloud, AWS |
| Areas of Focus | AutoML tools, Pre-trained models |
| Data Storage | Firebase, PostgreSQL |
| Model Deployment | FastAPI, TensorFlow Serving |
| Experiment Tracking | MLflow, Weights & Biases |
| Edge AI | TensorFlow Lite, ONNX |

Generative AI Tech Stack

The generative AI stack aims to build models that can generate original material, including writing, pictures, or music. These gen AI tech stacks are essential for fostering innovation in content creation, automation, and the creative industries.

- Transformer models, such as BERT and GPT, are among the fundamental elements.

- These models are excellent at comprehending and producing human-like text.

- They drive improvements in generative tasks and natural language processing.

Datasets: Another essential component is datasets. High-quality, diversified datasets are crucial to generative AI development. Carefully selected and labeled datasets provide the context and depth required to train complex models.

- Creating and implementing generative AI solutions is further simplified by AI tools for software development.

- Developers may effectively refine or include generative capabilities using pre-trained models and APIs provided by platforms like Hugging Face Transformers and OpenAI API.

Enterprise AI Tech Stack

Large organizations have specific demands, and enterprise AI tech stacks are made to fulfill those needs. Scalability, customization, and interaction with current systems are prioritized in these stacks. Important traits consist of:

Tailored Solutions: Businesses frequently need AI solutions tailored to their goals and operations. It is typical to design and integrate custom models.

Scalability and Customization: Enterprise AI stacks must manage extensive operations while being flexible enough to meet changing business requirements.

MLOps Integration: Long-term success requires strong model deployment, monitoring, and retraining capabilities.

| Stack Category | Tools and Frameworks |
| --- | --- |
| Generative AI Models | BERT, GPT, DALL·E, Stable Diffusion |
| Data Management | Snowflake, Apache Hadoop, Google BigQuery |
| Compute & Infrastructure | AWS AI/ML, Azure AI, Google Cloud AI |
| Development Frameworks | TensorFlow, PyTorch, Hugging Face Transformers |
| MLOps & Automation | Kubeflow, MLflow, Databricks |
| Security & Compliance | IBM AI Fairness 360, AI Explainability 360, GDPR Compliance Tools |
| Edge AI & Deployment | NVIDIA Jetson, TensorFlow Serving, ONNX |
| API & Integration | OpenAI API, Google Vertex AI, Amazon Bedrock |

Domain-Specific AI Tech Stacks

Depending on their particular problems, many industries need a highly specialized tech stack for AI. Some examples are as follows:

Healthcare

An AI tech stack for healthcare enables applications like patient management systems and diagnostic tools while emphasizing data protection and regulatory compliance.

Finance

Real-time processing and strong security are key components of the AI stack for fintech solutions in algorithmic trading and fraud detection.

Retail

An AI tech stack for eCommerce platforms is built for scalability, and consumer insights fuel inventory optimization programs and personalization engines.

Manufacturing

An AI software stack in manufacturing improves predictive maintenance, quality control, and robotic automation, optimizing production efficiency and reducing operational downtime.

How to Measure AI Tech Stack Efficiency?

Measuring AI tech stack efficiency involves evaluating model performance, scalability, resource utilization, and deployment speed. Establishing metrics lets you confirm that your tech stack delivers quantifiable benefits.

Model Accuracy and Performance

Measures like accuracy, recall, and F1 scores are used to assess the performance of models. Routine testing ensures accuracy over time.
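
For a binary classifier, these measures follow directly from counting correct and incorrect predictions. The sketch below computes them by hand on a small set of made-up labels. It also reports precision, since the F1 score is the harmonic mean of precision and recall. In practice, a library such as Scikit-learn provides equivalent functions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical labels and predictions, for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Running the same function on fresh data at regular intervals is one simple way to carry out the routine testing mentioned above and to notice when performance starts to drift.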

Scalability and Reliability

Examine the stack’s capacity to sustain uptime and manage growing workloads. Long-term flexibility is guaranteed via scalability.

Cost Efficiency

Monitor return on investment by contrasting company results with operating expenses. Use inexpensive tools wherever possible.

Time-to-Market

Calculate the speed at which your group can create and implement AI solutions. A simplified tech stack accelerates innovation.

Challenges in Implementing an AI Tech Stack

Organizations must overcome several obstacles to guarantee that their stack operates efficiently and sustainably. Below are the main challenges in overseeing an AI technology stack.

Complexity and Interoperability

AI tech stacks include data infrastructure, ML frameworks, programming tools, and deployment platforms. It is challenging to ensure that these parts function as a unit. Conflicting tool upgrades, compatibility limitations, and integration problems can all lead to delays and inefficiencies.

Organizations must address this by ensuring interoperability through frequent upgrades and a well-designed architecture. Another way to reduce complexity is to use modular tools with strong APIs.

Managing Large-Scale Data

The amount and diversity of data required for AI applications might be daunting. Large-scale dataset processing, analysis, and storage require specialized tools and a strong infrastructure. Furthermore, ensuring data consistency and quality across several sources is difficult.

Organizations must invest in scalable data solutions, such as cloud-based data lakes and data warehouses. Implementing strict data governance procedures and automated preprocessing can make large-scale data management easier.

Ethical and Regulatory Concerns

Concerns about ethics and regulations arise because AI systems frequently deal with sensitive data. Addressing concerns about bias, accountability, and transparency in AI models is crucial. Following data protection regulations like the CCPA and GDPR is also essential.

To overcome these obstacles, organizations must hire AI developers who embrace ethical AI practices, develop AI governance frameworks, and perform frequent audits for compliance and fairness. Tools such as IBM AI Fairness 360 can help identify and lessen biases.

Talent Gap in AI Development

To build and manage an AI tech stack, a team with various capabilities, including knowledge of data science, machine learning, generative AI tools for software development, and system integration, is needed. However, finding experts with in-depth knowledge of these fields is still tricky.

Organizations need to upskill their current workers, collaborate with academic institutions, or hire an AI consulting company that can handle intricate AI tech stacks to close this gap. They must also cultivate a culture of continual learning.

Latest Trends in Artificial Intelligence Tech Stack

AI tech stacks are evolving with trends like edge AI, low-code platforms, transformer models, AI automation, and ethical AI frameworks, enhancing efficiency, scalability, and responsible AI deployment across industries. Let’s look at some top AI tech stack trends—

AutoML (Automated Machine Learning)

The increasing use of automation in machine learning, or AutoML, streamlines the selection of models and their parameters. This allows more businesses to use AI without requiring extensive data science knowledge.

AI-as-a-Service (AIaaS)

AI-as-a-Service is becoming more widely available as cloud platforms expand. It enables businesses to incorporate AI transformation services without making significant infrastructure investments.

AI-driven DevOps (AIOps)

With AI-powered tools and methodologies automating activities like code deployment, monitoring, and debugging, AI will become more and more critical in DevOps operations. AIOps, or the fusion of AI with DevOps, will increase system stability and optimize development processes.

AI in Cybersecurity

AI will be utilized increasingly to improve organizational security by predicting, detecting, and responding to cyber threats more quickly and precisely. This method facilitates a more comprehensive integration of AI across systems, increasing the security, scalability, and ease of AI installations.

AI in Quantum Computing

By 2025, AI technology stacks might start using early-stage quantum processors for complex problem-solving tasks and integrating quantum-resistant algorithms, with an impact on sectors like material sciences, logistics, and cryptography.

The Future of AI Tech Stacks

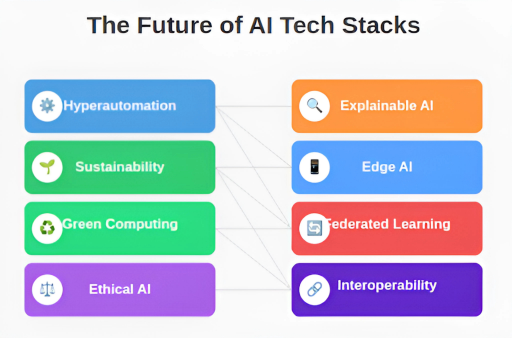

Advances in computational power, ethical AI practices, and global challenges will drive the development of AI technology stacks in innovative ways. Here are some emerging trends that will shape the future of AI stacks:

Hyperautomation and Autonomous AI Systems

Hyper-automation will transform sectors like agriculture and public services. AI tech stacks will seamlessly integrate robotic process automation (RPA), natural language processing (NLP), and the Internet of Things (IoT) to enable fully autonomous decision-making systems.

AI in Sustainability and Green Computing

Sustainability will be at the forefront of AI development. AI tech stacks will emphasize energy-efficient designs, such as hardware optimization and low-power models. AI will be used, for example, to control renewable energy networks and lower supply chain carbon footprints.

The broad adoption of environmentally friendly AI solutions will be fuelled by developments in green AI, which are backed by international efforts and legislation.

Ethical and Explainable AI

The need for accountability and transparency in AI systems will change the specifications for the AI tech stack. Explainable AI (XAI) frameworks, which ensure that models are comprehensible and compliant with ethical standards, will be a common feature. Organizations will use AI governance and auditing technologies, especially in high-stakes industries like healthcare and finance.

Edge AI and Federated Learning

The adoption of Edge AI, in which AI models are deployed directly on edge devices, will be fuelled by the growth of IoT devices and the requirement for real-time processing. In this setting, federated learning will facilitate cooperative learning across dispersed edge devices while protecting data privacy.
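
The privacy benefit of federated learning comes from what is shared: devices send model weights, not raw data. A simplified version of the averaging step at the heart of this approach is sketched below. The `federated_average` function and the three device updates are hypothetical, and real systems add secure aggregation, client sampling, and many training rounds.

```python
def federated_average(client_updates):
    """Combine client model weights, weighting each client by its sample count.

    client_updates: list of (weights, num_samples) pairs, where weights is a
    list of floats of equal length across clients.
    """
    total = sum(n for _, n in client_updates)
    size = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total
        for i in range(size)
    ]


# Hypothetical updates from three edge devices: (local weights, local sample count).
updates = [
    ([1.0, 2.0], 10),
    ([3.0, 4.0], 30),
    ([5.0, 6.0], 60),
]
global_weights = federated_average(updates)
print(global_weights)
```

Weighting by sample count means a device that trained on more data has proportionally more influence on the shared model, while its underlying records never leave the device.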

Interoperability and Standardization

As the AI ecosystem grows, interoperability and standardization across the many Gen AI tech stack components are becoming increasingly important. Efforts to standardize model structures, data formats, and APIs will make collaboration and integration easier.

Why SparxIT is an Ideal Partner for Your AI Development Needs?

We’ve provided a detailed guide on the AI tech stack, covering everything from data handling to deployment. Hope this helps you navigate the complexities and create innovative, resilient, impactful AI solutions. However, you can always consult with a top AI consulting company if you need any assistance.

SparxIT is a top AI development services provider with a long history of delivering innovation-intensive AI solutions. Our team comprises experts who are well-versed in the latest AI technologies and promote innovation. As a leading AI development firm, we help you choose the best AI tech stack for your needs, ensuring that every technology choice aligns with your project goals.

With SparxIT’s continuous support and maintenance, you can be sure that your AI systems are ready to take advantage of new opportunities and demands in the future. By selecting SparxIT as your AI development company, you invest in a partnership, prioritizing your sustained success and maximizing your return on investment.


Frequently Asked Questions

What are the latest trends in AI technology stacks?

AI tech stacks are evolving with trends like edge AI, multimodal learning, federated learning, and AutoML. Frameworks like TensorFlow, PyTorch, and ONNX are also gaining popularity for efficient model deployment.

Why is the AI tech stack important for AI development?

Your AI tech stack determines performance, scalability, and efficiency. The right tools streamline data processing, model training, and deployment while ensuring compatibility, flexibility, and security for seamless AI-driven innovation.

How do I choose the right AI tech stack for my project?

Focus on your project’s needs—data size, model complexity, scalability, and budget. Choose frameworks like TensorFlow or PyTorch and ensure that cloud, edge, or on-prem deployment aligns with your goals.

How do AI tech stacks support machine learning and deep learning applications?

AI tech stacks provide essential tools for data processing, model training, and inference. They optimize performance with GPUs, TPUs, and scalable frameworks, making ML and deep learning models more efficient and powerful.